Neutrino Physics and Machine Learning 2024

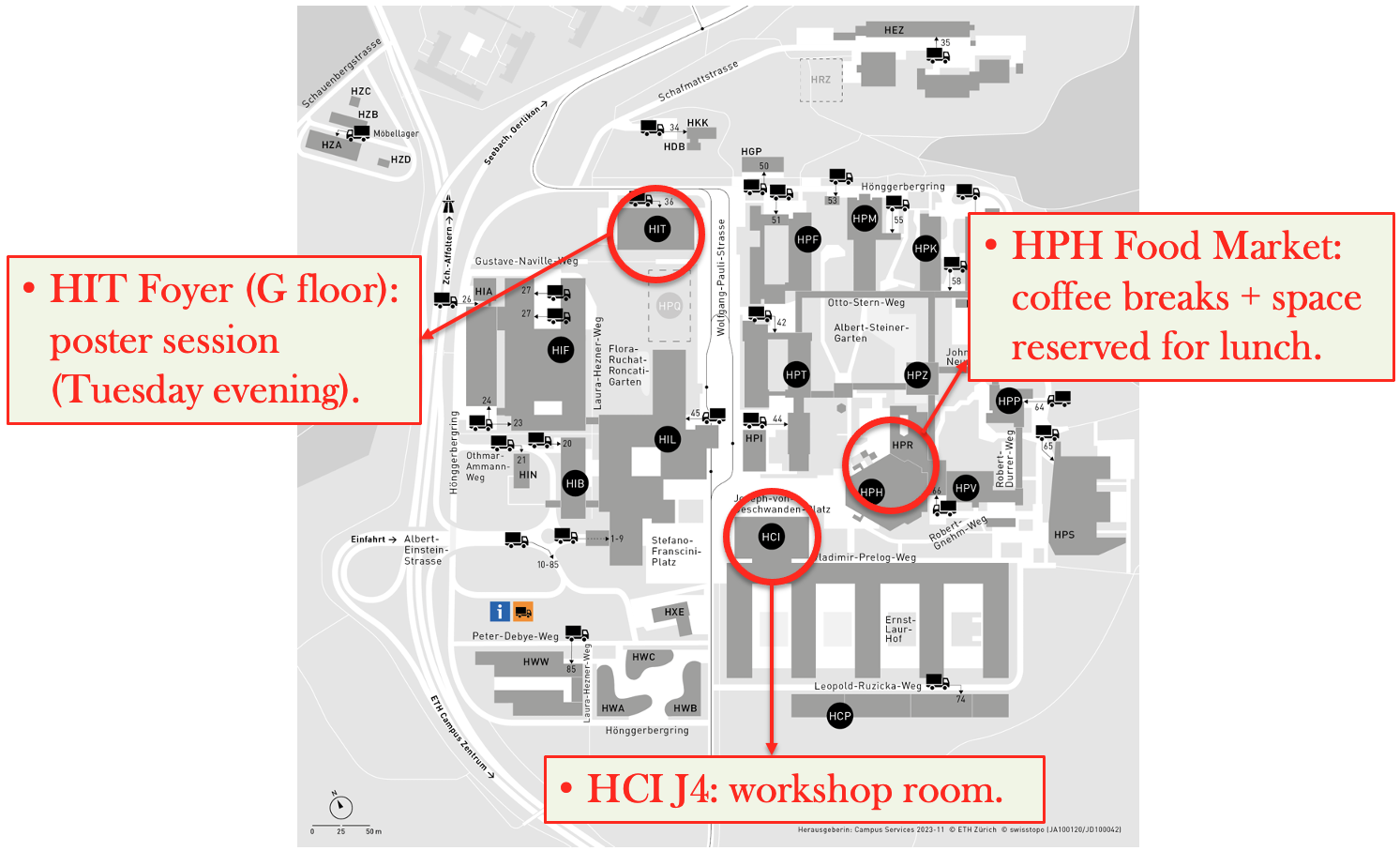

HCI J4

ETH Zurich

Machine Learning (ML) techniques have been adopted at all levels of applications, including experimental design optimization, detector operations and data taking, physics simulations, data reconstruction, and physics inference. Neutrino Physics and Machine Learning (NPML) is dedicated to identifying, reviewing, and building future directions for impactful research topics for applying ML techniques in Neutrino Physics.

We invite both individual speakers and representatives of large collaborations in the neutrino community to share the development and applications of ML techniques. Speakers from outside neutrino physics are welcome to make contributions.

Registration: use this registration form.

Question form: use this form to submit questions for any speaker!

Fee: Students: 250 CHF (Payment Link for Students), Rest: 350 CHF (Payment Link for Non-Students).

Talk/Poster Request: please submit your contribution request in this Indico page (deadline passed on April 14).

NPML24 is designed as an on-site event to encourage interactive discussions. Nevertheless, all presentations will be accessible remotely on the Indico site. We thank you for your understanding.

Local Committee:

- Saúl Alonso-Monsalve (ETH Zurich)

- Marta Babicz (University of Zurich)

- Davide Sgalaberna (ETH Zurich)

- Leigh Whitehead (University of Cambridge)

- Jennifer Zollinger (ETH Zurich)

International Scientific Committee:

- Kazuhiro Terao (SLAC)

- Patrick de Perio (Kavli IPMU)

- Jianming Bian (UC Irvine)

- Adam Aurisano (University of Cincinnati)

- Nick Prouse (Imperial College London)

- Corey Adams (ANL)

- Taritree Wongjirad (Tufts University)

- Aobo Li (UC San Diego)

Organization Committee [contact]

8:30 AM

→

8:50 AM

Registration 20m HCI J4

ETH Zürich, Hönggerberg campus, Stefano-Franscini-Platz 5, 8093 Zurich, Switzerland.

8:50 AM

→

12:15 PM

Day 1 - Morning HCI J4

JUNO/SNO+ Large Scintillator Detectors

- 8:50 AM

-

9:05 AM

Overview of machine learning applications in JUNO 25m

The Jiangmen Underground Neutrino Observatory (JUNO) is a next-generation neutrino experiment currently under construction in southern China. It is designed with a 20 kton liquid scintillator detector and 78% photomultiplier tube (PMT) coverage. The primary physics goal of JUNO is to determine the neutrino mass ordering and measure oscillation parameters with unprecedented precision. JUNO’s large mass and high PMT coverage provide a perfect scenario for the application of various machine learning techniques. In this talk, I present an overview of the recent progress of machine learning studies in JUNO, including waveform-level and event-level reconstruction, background rejection, and signal classification. Preliminary results with Monte Carlo simulations are presented, showing great potential in enhancing the detector’s performance as well as expanding JUNO’s physics capabilities beyond the traditional scope of large liquid scintillator detectors.

Speaker: Teng Li (Shandong University)

9:30 AM

Q/A 10m

-

9:40 AM

Machine-Learning based photon counting for PMT waveforms and its application to the energy reconstruction of JUNO 15m

The Jiangmen Underground Neutrino Observatory (JUNO) is a state-of-the-art 20 kton liquid scintillator detector designed to achieve an unprecedented energy resolution of 3% at 1 MeV. The energy resolution is of paramount importance for the measurement of neutrino mass ordering (NMO) through the study of reactor neutrinos at JUNO. A key factor contributing to the energy resolution in JUNO is the charge smearing of PMTs. This talk introduces an ML-based photon counting method for PMT waveforms and its application to the energy reconstruction of JUNO. By integrating the photon counting information into the charge-based likelihood function, this approach can partially mitigate the impact of the PMT charge smearing and improve the energy resolution by about 2% to 3% at different energies.

Speaker: Guihong Huang (Wuyi University)

9:55 AM

Q/A 10m

-

10:05 AM

Interpretable machine learning approach for electron antineutrino selection in the JUNO experiment 15m

The Jiangmen Underground Neutrino Observatory (JUNO) is a neutrino experiment under construction with a broad physics program. The main goals of JUNO are the determination of the neutrino mass ordering and the high-precision measurement of neutrino oscillation properties with anti-neutrinos produced in commercial nuclear reactors. JUNO's central detector is an acrylic sphere 35.4 meters in diameter filled with 20 kt of liquid scintillator. It is equipped with photomultiplier tubes (PMTs) of two types: 17 612 20-inch PMTs and 25 600 3-inch PMTs. The central detector is designed to provide an energy resolution of 3% at 1 MeV.

JUNO detects electron antineutrinos via inverse beta decay (IBD). The IBD reaction provides a pair of correlated events, and thus a strong experimental signature to distinguish the signal from backgrounds. However, given the low cross-section of antineutrino interactions, the development of a powerful event selection algorithm that effectively discriminates between signal and background events is essential to most of JUNO’s physics goals. This talk presents a fully connected neural network as a powerful signal-background discriminator.

We also present the first interpretable analysis of the neural network approach for event selection in reactor neutrino experiments. This analysis provides insights into the decision-making process of the model and offers valuable information for improving and updating traditional event selection approaches.

Speaker: Arsenii Gavrikov (INFN-Padova + The University of Padova)

10:20 AM

Q/A 10m

-

10:30 AM

Coffee break 30m

-

11:00 AM

Reconstruction of atmospheric neutrino’s directionality in JUNO with machine learning 15m

The Jiangmen Underground Neutrino Observatory (JUNO) is a next-generation large (20 kton) liquid-scintillator neutrino detector, designed to determine the neutrino mass ordering from its precise reactor neutrino spectrum measurement. Additionally, high-energy (GeV-level) atmospheric neutrino measurements could also improve its sensitivity to mass ordering via matter effects on oscillations, which depend on the directional (zenith angle) resolution of the incident neutrino. However, large unsegmented liquid scintillator detectors like JUNO are traditionally limited in their capabilities of measuring event directionality.

This contribution presents a machine learning approach for the directional reconstruction of atmospheric neutrinos in JUNO, which can be applied to other liquid scintillator detectors as well. In this method, several features relevant to event directionality are extracted from PMT waveforms and used as inputs to the machine learning models. Three independent models are used to perform reconstruction, each with its own unique approach for handling the same input features. Preliminary results based on Monte Carlo simulation show promising potential for this approach.

Speaker: Dr Feng Gao (Université libre de Bruxelles (ULB))

11:15 AM

Q/A 10m

-

11:25 AM

Identification of atmospheric neutrino's flavor in JUNO with machine learning 15m

The Jiangmen Underground Neutrino Observatory (JUNO), located in southern China, is a multi-purpose neutrino experiment that consists of a 20-kton liquid scintillator detector. The primary goal of the experiment is to determine the neutrino mass ordering (NMO) and measure the relevant oscillation parameters to a high precision. Atmospheric neutrinos are sensitive to NMO via matter effects and can improve JUNO’s total sensitivity in a joint analysis with reactor neutrinos, for which a good capability of reconstructing atmospheric neutrinos is crucial.

In this contribution, we present a machine learning approach for the particle identification of atmospheric neutrinos in JUNO. The method for extracting features from PMT waveforms, which are used as inputs to the machine learning models, is detailed. Two strategies of utilising neutron capture information are also discussed. Preliminary results based on Monte Carlo simulations are also presented. We demonstrate that a machine-learning-based approach can achieve the required level of accuracy for oscillation-related physics measurements.

Speaker: Wing Yan Ma (Shandong University, China)

11:40 AM

Q/A 10m

-

11:50 AM

Deep learning approaches for fast event reconstruction in the SNO+ scintillator phase and beyond 15m

SNO+ is an operational multi-purpose neutrino detector located 2 km underground at SNOLAB in Sudbury, Ontario, Canada. 780 tonnes of linear alkylbenzene-based liquid scintillator are observed by ~9300 photomultiplier tubes (PMTs) mounted outside the spherical scintillator volume. SNO+ has a broad physics program which will include a search for the neutrinoless double beta decay of $^{130}$Te.

Artificial neural networks are being developed for reconstruction tasks at SNO+, ingesting events which consist of an unordered set of PMT hit information. We present a comparison of these methods applied to the position reconstruction of point-like events, which has traditionally been approached by maximizing a complex likelihood based on PMT hit times. The networks we present include a custom, flexible two-part architecture consisting of a convolutional feature extractor and a simple feedforward network; and a transformer using events tokenised on a hit-by-hit basis. We find some performance gains compared to likelihood optimization while evaluating orders of magnitude faster.

Speakers: Cal Hewitt (University of Oxford), Mark Anderson (Queen's University)

12:05 PM

Q/A 10m

-

12:15 PM

→

1:35 PM

Lunch break 1h 20m

-

1:35 PM

→

5:55 PM

Day 1 - Afternoon HCI J4

ML-based data reconstruction and analysis in NOvA, MicroBooNE, and ICARUS

-

1:35 PM

Identifying Neutrino Final States in MicroBooNE with a New Deep-Learning Based LArTPC Reconstruction Framework 15m

MicroBooNE, a Liquid Argon Time Projection Chamber (LArTPC) located in the $\nu_{\mu}$-dominated Booster Neutrino Beam at Fermilab, has been studying $\nu_{e}$ charged-current (CC) interaction rates to shed light on the MiniBooNE low energy excess. The LArTPC technology employed by MicroBooNE provides the capability to image neutrino interactions with mm-scale precision. Computer vision and other machine learning techniques are promising tools for image processing that could boost efficiencies for selecting $\nu_{e}$-CC and other rare signals while reducing cosmic and beam-induced backgrounds. The MicroBooNE experiment has been at the forefront of developing and testing such techniques for use in physics analyses. In this talk we give an overview of a new deep-learning-based MicroBooNE reconstruction framework that uses convolutional neural networks to locate neutrino interaction vertices, tag pixels with track and shower labels, and perform particle identification on reconstructed clusters. We will present studies characterizing the performance of these new tools and demonstrate their effectiveness through their use in an inclusive $\nu_{e}$-CC event selection.

Speaker: Matthew Rosenberg (Tufts University)

1:50 PM

Q/A 10m

-

2:00 PM

Using CNN for a Dark Trident Search at MicroBooNE 15m

We present a first search for dark-trident scattering in a neutrino beam using a data set taken with the MicroBooNE detector at Fermilab. Proton interactions in the neutrino target at the Main Injector produce neutral mesons, which could decay into dark-matter (DM) particles mediated via a dark photon A′. A convolutional neural network (CNN) is trained to identify interactions of the DM particles in the liquid-argon time projection chamber (LArTPC) exploiting its image-like reconstruction capability. The CNN architecture is based on a model for dense images with adaptations for LArTPCs. The output layer has two neurons that correspond to the probability for signal or background.

In the absence of a DM signal, we provide limits at the 90% confidence level on the coupling parameters of the model as a function of the dark-photon mass using the CNN outputs, excluding previously unexplored regions of parameter space.

Speaker: Luis Mora Lepin
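As an illustration of the two-neuron output layer mentioned in the abstract, the sketch below shows how a softmax turns two raw network scores into signal and background probabilities that sum to one. It is a minimal sketch under assumptions, not the MicroBooNE implementation: the function name `softmax2` and the example scores are hypothetical, and the abstract does not state which activation the network actually uses.

```python
import math

def softmax2(signal_logit, background_logit):
    """Convert two raw output scores into (P(signal), P(background)).

    Hypothetical helper for illustration only; subtracting the maximum
    score before exponentiating keeps the computation numerically stable.
    """
    m = max(signal_logit, background_logit)
    exp_sig = math.exp(signal_logit - m)
    exp_bkg = math.exp(background_logit - m)
    total = exp_sig + exp_bkg
    return exp_sig / total, exp_bkg / total

# Example scores (made up): the signal neuron fires more strongly.
p_sig, p_bkg = softmax2(2.0, 0.0)
print(f"P(signal) = {p_sig:.3f}, P(background) = {p_bkg:.3f}")
```

A cut on the signal probability would then define the selection; where to place that cut is an analysis choice that the abstract does not specify.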

2:15 PM

Q/A 10m

-

2:25 PM

Data-Driven Light Model for the MicroBooNE Experiment 20m

MicroBooNE is a short baseline neutrino oscillation experiment that employs a Liquid Argon Time Projection Chamber (LArTPC) together with an array of Photomultiplier Tubes (PMTs), which detect scintillation light. This light detection is necessary for providing a means to reject cosmic ray background and trigger on beam-related interactions. Thus, accurate modeling of the expected optical detector signal is critical. Previous light models used on MicroBooNE have been simulation-based, which limits accuracy related to certain regions of the detector as well as different data conditions during runs. We present the status of a data-driven light model that uses a neural network to map the light yield in the MicroBooNE detector, allowing for specific conditioning based on MicroBooNE data.

Speaker: Polina Abratenko (Tufts University)

2:45 PM

Q/A 10m

-

2:55 PM

Particle Trajectory Reconstruction and Euclidean Equivariant Neural Networks 20m

Training neural networks for analyzing three-dimensional trajectories in particle detectors presents challenges due to the high combinatorial complexity of the data. Incorporating networks with Euclidean Equivariance could significantly reduce the reliance on data augmentation. To achieve Euclidean Equivariance, we construct neural networks that primarily represent data and perform convolutions as functions of spherical harmonics. Our primary focus is on data from neutrino experiments utilizing liquid argon time projection chambers.

Speaker: Omar Alterkait (Tufts University / IAIFI)

3:15 PM

Q/A 10m

-

3:25 PM

Deep Learning applications for electron neutrino reconstruction in the ICARUS experiment 25m

The ICARUS T600 detector is a liquid argon time projection chamber (LArTPC) installed at Fermilab, aimed at a sensitive search for a possible electron neutrino excess in the 200-1000 MeV region. To investigate $\nu_{e}$ appearance signals in ICARUS, a fast and accurate algorithm for selecting electron neutrino events from a background of cosmic interactions is required. We present an application of the general-purpose deep-learning-based reconstruction algorithm developed at SLAC to the task of electron neutrino reconstruction in the ICARUS detector. We demonstrate its effectiveness using the ICARUS detector simulation dataset containing $\nu_{e}$ events and out-of-time cosmic interactions generated using the CORSIKA software. In addition, we compare the selection efficiency/purity and reconstructed energy resolution across different initial neutrino energy ranges, and discuss current efforts to improve reconstruction of low energy neutrino events.

Speakers: Dae Heun Koh (SLAC), Francois Drielsma (SLAC)

3:50 PM

Q/A 10m

-

4:00 PM

Coffee break 30m

-

4:30 PM

Muon Neutrino Reconstruction and Neutral Pion Calibration with Machine Learning Techniques at the ICARUS Detector 25m

The ICARUS T600 Liquid Argon Time Projection Chamber (LArTPC) detector is the far detector of the Short Baseline Neutrino (SBN) Program located at Fermi National Accelerator Laboratory (FNAL). The data collection for ICARUS began in May 2021, utilizing neutrinos from the Booster Neutrino Beam (BNB) and the Neutrinos at the Main Injector off-axis beam (NuMI). The SBN Program has been designed to investigate the observed neutrino anomalies, e.g., the earlier electron neutrino excess from the LSND experiment and the more recent MiniBooNE anomaly. To analyze collected neutrino data, we utilize two methods of event reconstruction: (1) the Pandora multi-algorithm approach to automated pattern recognition, and (2) an approach making use of machine learning (ML). The latter method folds in 3D voxel-level feature extraction using sparse convolutional neural networks and particle clustering using graph neural networks to produce outputs suitable for physics analyses. This presentation will summarize the performance of a high-purity and high-efficiency end-to-end machine-learning-based selection of muon neutrinos from the BNB and highlight studies of electromagnetic shower reconstruction from a neutral pion selection.

Speaker: Daniel Carber (Colorado State University, Fort Collins)

4:55 PM

Q/A 10m

-

5:05 PM

Deep Learning in NOvA 15m

The NOvA experiment uses the ~1 MW NuMI beam from Fermilab to study neutrino oscillations: electron neutrino appearance and muon neutrino disappearance in a baseline of 810 km, with a 300-ton near detector and a 14-kiloton far detector. NOvA was the first experiment in high-energy physics to apply convolutional neural networks to classify neutrino interactions and composite particles in a physics measurement. Currently, NOvA is crafting new deep-learning techniques to improve interpretability, robustness, and performance for future physics analyses. This talk will cover the advancements in deep-learning-based reconstruction methods utilized in NOvA.

Speaker: Barbara Yaeggy (University of Cincinnati)

5:20 PM

Q/A 10m

-

5:30 PM

Transformer Network for Event/Particle Identification and Interpretability at NOvA 15m

The NOvA experiment is a long-baseline accelerator neutrino experiment utilizing Fermilab's upgraded NuMI beam. It measures the appearance of electron neutrinos and the disappearance of muon neutrinos at its Far Detector in Ash River, Minnesota. NOvA is the first neutrino experiment to use convolutional neural networks (CNNs) for event identification and reconstruction. Recently, we introduced a transformer network—commonly used in large language models like ChatGPT—for simultaneous event classification and final state particle identification at NOvA. This neural network also incorporates Sparse CNNs into its architecture. The attention mechanism in the transformer is used to diagnose the neural network and study correlations between inputs and outputs, thereby providing interpretability to the neural network. In this talk, I will discuss the architecture, identification performance, and interpretability of the NOvA transformer neural network.

Speaker: Jianming Bian (University of California, Irvine)

5:45 PM

Q/A 10m

-

6:00 PM

→

7:00 PM

Reception - Poster Session HCI J4

-

8:30 AM

→

8:50 AM

-

-

9:00 AM

→

12:25 PM

Day 2 - Morning HCI J4

Rare event search in the 0νββ and Dark Matter experiments

-

9:00 AM

ML applications in radiological image analysis for cancer research 25m

Imaging is one of the main pillars of clinical protocols for cancer care, providing essential non-invasive biomarkers for detection, diagnosis and response assessment. The development of Artificial Intelligence (AI) tools, and Machine Learning (ML) in particular, has proven potential to transform the analysis of radiological images by significantly reducing processing time, by increasing the reproducibility of measurements and by improving the sensitivity of tumour detection compared to the standard visual interpretation, leading to earlier cancer detection. In this talk I will highlight some of the work done in our Radiogenomics and Quantitative Image Analysis group, including examples of ML strategies to predict response to chemotherapy treatment, and point out areas in which there is potential for synergistic collaborations to bring state-of-the-art ML methods developed in neutrino physics to the clinic.

Speaker: Lorena Escudero (University of Cambridge)

9:25 AM

Q/A 10m

-

9:35 AM

Topological Event discrimination using Deep Convolutional Neural Networks for the NEXT Experiment 15m

The NEXT experiment is an international collaboration that searches for the neutrinoless double-beta decay using $^{136}\mathrm{Xe}$. It features an entirely gaseous TPC, which allows for the resolution of individual electron tracks. This opens up the possibility to employ machine learning techniques to distinguish between signal and background events based on their topological signature. In this talk, I will present previous efforts in using convolutional neural networks (CNNs) for event classification in NEXT, as well as ongoing work in trying the same with Graph Neural Networks (GNNs). In an earlier study, CNNs were able to successfully identify electron-positron pair production events, which exhibit a topology similar to that of a neutrinoless double-beta decay event. These events were produced in the NEXT-White high-pressure xenon TPC using 2.6-MeV gamma rays from a $^{228}\mathrm{Th}$ calibration source. The use of CNNs offers significant improvement in signal efficiency and background rejection when compared to previous non-CNN-based analyses. The current work on GNNs, while still in development, aims to surpass these results and move on to data from the upcoming NEXT-100 detector.

Speaker: Fabian Kellerer (Universitat de Valencia)

9:50 AM

Q/A 10m

-

10:00 AM

Signal Denoising with Machine Learning for LEGEND Data 15m

The LEGEND experiment is dedicated to the search for neutrinoless double beta decay using $^{76}\mathrm{Ge}$-enriched High Purity Germanium detectors. While LEGEND has excellent energy resolution and ultra-low background levels, noise from readout electronics can make identifying events of interest more challenging. An efficient signal denoising algorithm can further enhance LEGEND’s energy resolution and background rejection techniques, and help identify low-energy events where the signal-to-noise ratio is small. In this talk, I will present several promising machine-learning-based approaches, such as Noise2Noise with an autoencoder, to effectively remove electronic noise from LEGEND detector signals without the need for simulated data (or ground truth). Such a denoising algorithm can also be extended beyond the LEGEND experiment and is broadly applicable to other detector technologies.

This work is supported by the U.S. DOE and the NSF, the LANL, ORNL and LBNL LDRD programs; the European ERC and Horizon programs; the German DFG, BMBF, and MPG; the Italian INFN; the Polish NCN and MNiSW; the Czech MEYS; the Slovak SRDA; the Swiss SNF; the UK STFC; the Russian RFBR; the Canadian NSERC and CFI; the LNGS, SNOLAB, and SURF facilities.

Speaker: Tianai Ye (Queen's University)

10:15 AM

Q/A 10m

-

10:25 AM

Coffee break 35m

-

11:00 AM

Probabilistic Position Reconstruction in the XENONnT Experiment 15m

The XENONnT detector is a dual-phase xenon time projection chamber to search for rare low-energy events. While its main purpose is the direct detection of Dark Matter, XENONnT is also sensitive to neutrino interactions, for example from solar $^{8}$B neutrinos. To fully utilize the fiducialization and background reduction capabilities of the XENONnT detector, it is important to know the exact position of events in the detector. The event position reconstruction is commonly performed by a combination of different neural networks (NNs). Like most machine learning models, these NNs output a single point in the output space, here the horizontal plane of the detector. In this talk I will present a modification of the NNs, which changes their output from a single point to a probability density function (PDF) that spans the complete output space. The resulting PDF can be analyzed to learn about trends and biases in the position reconstruction, ultimately leading to an improved signal-to-background discrimination: the parameters of the PDF can be used to filter for potentially incorrectly reconstructed events. Additionally, the position uncertainty can be propagated through the full event reconstruction chain, providing a more accurate estimation of systematic uncertainties of the experiment.

Speaker: Sebastian Vetter (Karlsruhe Institute of Technology)
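To make the idea of a PDF-valued output concrete, the sketch below assumes the simplest possible parametrisation: an isotropic 2D Gaussian in the horizontal plane, described by a predicted mean and a single width. The abstract does not state the actual form of the PDF used in XENONnT, so the Gaussian choice, the function names, and the 2.0 cm width threshold are all hypothetical. The negative log-likelihood is the quantity such a network could be trained to minimise, and the predicted width is one example of a PDF parameter that could be used to flag poorly reconstructed events.

```python
import math

def gaussian_nll_2d(x_true, y_true, mu_x, mu_y, sigma):
    """Negative log-likelihood of a true position under an isotropic
    2D Gaussian with predicted mean (mu_x, mu_y) and width sigma.

    Density: exp(-r^2 / (2 sigma^2)) / (2 pi sigma^2).
    Illustrative assumption, not the XENONnT model.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    r2 = (x_true - mu_x) ** 2 + (y_true - mu_y) ** 2
    return math.log(2.0 * math.pi * sigma ** 2) + r2 / (2.0 * sigma ** 2)

def is_suspect(sigma, max_sigma_cm=2.0):
    """Flag events whose predicted width exceeds a (hypothetical) threshold."""
    return sigma > max_sigma_cm

# A confident, accurate prediction scores a lower loss than a confident, wrong one.
good = gaussian_nll_2d(1.0, 1.0, mu_x=1.1, mu_y=0.9, sigma=0.5)
bad = gaussian_nll_2d(1.0, 1.0, mu_x=4.0, mu_y=-2.0, sigma=0.5)
print(good < bad)
```

The loss penalises both a wrong mean and an overconfident width, which is what makes the predicted width usable as a per-event uncertainty.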

11:15 AM

Q/A 10m

-

11:25 AM

Enhancing event discrimination in LEGEND-200 with transformer 25m

At the forefront of investigating neutrinoless double beta decay (0νββ) using $^{76}$Ge-enriched detectors, the LEGEND experiment is driven by the quest to unravel the mysteries of neutrinos and explore physics beyond the Standard Model. In its initial phase, LEGEND-200 deploys 200 kg of germanium at INFN Gran Sasso National Laboratory, aiming for a discovery half-life sensitivity surpassing 10$^{27}$ years. The subsequent phase envisions the operation of 1000 kg of germanium, pushing the sensitivity threshold beyond 10$^{28}$ years.

An integral component of the LEGEND experiment is the meticulous analysis of acquired waveforms, leveraging Pulse Shape Discrimination (PSD) to identify Single-Site Events (SSE) and Multi-Site Events (MSE), essential for isolating potential 0$\nu\beta\beta$ candidates. SSE, characterized by energy deposition at a specific point, contrast with MSE, where energy is distributed across multiple locations within a single Ge detector, leading to complex waveforms with overlapping rising edges and distinctive features. Each of these locations contributes to the overall waveform, resulting in a nuanced signal pattern.

In addition to PSD, this study introduces deep learning, specifically transformers, to enhance event discrimination. Abundant training data, derived from weekly calibration runs of LEGEND-200, facilitates the transformer model's ability to learn from sequential data. By optimizing event discrimination, especially in the low-energy range, this innovative approach contributes significantly to the precision of the LEGEND experiment. The combination of standard analysis tools and deep learning techniques positions LEGEND at the forefront of experimental endeavours, promising a deeper understanding of neutrino physics and the potential for breakthroughs in the search for exotic physics beyond the Standard Model.

Speaker: Marta Babicz (University of Zurich)

11:50 AM

Q/A 10m

-

12:00 PM

Anomaly aware machine learning for dark matter direct detection at DARWIN 15m

This talk presents a novel approach to dark matter direct detection using anomaly-aware machine learning techniques in the DARWIN next-generation dark matter direct detection experiment. I will introduce a semi-unsupervised deep learning pipeline that falls under the umbrella of generalized Simulation-Based Inference (SBI), an approach that allows one to effectively learn likelihoods straight from simulated data, without the need for complex functional dependence on systematics or nuisance parameters. I also present an inference procedure to detect non-background physics utilizing an anomaly function derived from the loss functions of the semi-unsupervised architecture. The pipeline's performance is evaluated using pseudo-data sets in a sensitivity forecasting task, and the results suggest that it offers improved sensitivity over traditional methods.

Speaker: Andre Scaffidi (SISSA)

12:15 PM

Q/A 10m

-

12:25 PM

→

1:35 PM

Lunch break 1h 10m

-

1:35 PM

→

5:55 PM

Day 2 - Afternoon HCI J4

KATRIN and ML based end-to-end data reconstruction in SBN and DUNE-ND: LArTPCs with high pile-ups

-

1:35 PM

Latest results from the KATRIN experiment and insights into the neural network approach 25m

The Karlsruhe Tritium Neutrino (KATRIN) experiment probes the effective electron anti-neutrino mass by precisely measuring the tritium beta-decay spectrum close to its kinematic endpoint.

A world-leading upper limit of $0.8\,\mathrm{eV}\,c^{-2}$ (90% CL) has been set with the first two measurement campaigns.

Subsequent improvements in operational conditions and a substantial increase in statistics allow us to expand this reach.

This talk features the latest KATRIN results and provides insight into the neural network approach used to perform the computationally challenging analysis. Moreover, a short outlook on the upcoming Bayesian analysis will be given.

This work received funding from the European Research Council under the European Union Horizon 2020 research and innovation programme, and is supported by the Max Planck Computing and Data Facility, the Excellence Cluster ORIGINS, the ORIGINS Data Science Laboratory and the SFB1258.

Speaker: Alessandro Schwemmer (Technical University of Munich, Germany)

2:00 PM

Q/A 10m

-

2:10 PM

End-to-End, Machine-Learning-Based Data Reconstruction Chain for LArTPC detectors 25m

Recent leaps in Computer Vision (CV), made possible by Machine Learning (ML), have motivated a new approach to the analysis of particle imaging detector data. Unlike previous efforts which tackled isolated CV tasks, this talk introduces an end-to-end, ML-based data reconstruction chain for Liquid Argon Time Projection Chambers (LArTPCs), the state-of-the-art in precision imaging at the intensity frontier of neutrino physics. The chain is a multi-task network cascade which combines voxel-level feature extraction using Sparse Convolutional Neural Networks and particle superstructure formation using Graph Neural Networks. Each individual algorithm incorporates physics-informed inductive biases, while their collective hierarchy enforces a causal relationship between them. The output is a comprehensive description of an event that may be used for high-level physics inference. The chain is end-to-end optimizable, eliminating the need for time-intensive manual software adjustments. The Short Baseline Neutrino (SBN) program aims to clarify neutrino flux anomalies observed in previous experiments by leveraging two LArTPCs (ICARUS and SBND) placed at different distances relative to the Booster Neutrino Beam (BNB) target. This presentation highlights the progress made in leveraging the ML-based reconstruction chain to achieve the SBN physics goals, which will pave the way for the future success of the Deep Underground Neutrino Experiment (DUNE).

Speaker: Francois Drielsma (SLAC)

2:35 PM

Q/A 10m

-

2:45 PM

One Neural Network, Two Detector Mediums, Three Detection Regions: Multi-detector Machine Learning with DUNE’s Near Detector Prototype 15m

The DUNE near detector is employing new technologies in Liquid Argon Time Projection Chamber (LArTPC) detection methods, including a 3D charge pixel readout, and is modularized into a 5x7 rectangular grid of TPCs. A smaller 2x2 prototype is nearing testing in the NuMI neutrino beam at Fermilab and we are faced with reconstructing the modularized, 3D LArTPC images. While a chain of machine learning reconstructions has been developed for clustering charge inputs and identifying particle signatures in the LArTPC, it does not take in any information from the solid scintillator endcap detectors that collect particle spills upstream and downstream of the 2x2 volume. This work explores combining input from both detector mediums into the machine learning reconstruction to identify particles that cross through both of the multi-detector regions.

Speaker: Jessie Micallef (Institute for AI and Fundamental Interactions (MIT & Tufts))

3:00 PM

Q/A 10m

-

3:10 PM

Michel Electron Reconstruction Using a Novel Deep-Learning-Based Multi-Level Event Reconstruction in ICARUS 15m

The ICARUS detector, situated on the Fermilab beamline as the Far Detector of the SBN (Short Baseline Neutrino) program, is the first large-scale operating LArTPC (Liquid Argon Time Projection Chamber). The mm-scale spatial resolution and precise timing of LArTPC enable voxelized 3D event reconstruction with high precision. A scalable deep-learning (DL)-based event reconstruction framework for LArTPC data has been developed, incorporating suitable choices of sparse tensor convolution and graph neural networks to fully utilize LArTPC's high-resolution imaging capabilities. Michel electrons, which are daughter electrons from the decay-at-rest of cosmic ray muons, have an energy spectrum that is theoretically well understood. The reconstruction of Michel electrons in LArTPC can demonstrate the capability of the system for low-energy electron reconstruction. This poster presents an end-to-end, deep-learning-based approach for Michel electron reconstruction in ICARUS.

Speaker: Yeon-jae Jwa (SLAC) -

3:25 PM

Q/A 10m

-

3:35 PM

Generative Modeling for LArTPC Images 15m

Generating simulation data for future and current LArTPC experiments requires addressing several challenges, such as reducing computation time and the expression of detector model uncertainties. Inspired by the success of recently developed generative models to produce complex, high-dimensional data such as natural images, we are exploring how these methods might be applied to LArTPCs. Initial studies of denoising diffusion probabilistic models (DDPMs) have been able to successfully reproduce simulated 2D LArTPC images. This generative model approach produces high-fidelity images of track and shower particle event types that demonstrate realistic physics metrics. We continue to explore various applications for physics analyses across different datasets. In this talk, I will discuss our methodology, quality metrics, and direction of future work with generative modeling for LArTPC physics.

Speaker: Zeviel Imani (Tufts University / IAIFI) -

3:50 PM

Q/A 10m

-

4:00 PM

Coffee break 30m

-

4:30 PM

Deep Generative Models for Neutrino Physics 25m

Deep generative models have entered the mainstream with transformer-based chatbots and diffusion-based image generation models. We showcase the utility of such models with several studies focused on different aspects of neutrino physics.

Firstly, we present a method based on flow matching suitable for cross-section measurements in the precision era of neutrino physics. Using flow matching, we train a continuous normalizing flow and we show that it captures the underlying density described by variations of systematic parameters faithfully.

Secondly, we present an autoregressive transformer-based method for paired generative tasks in neutrino physics. Unlike traditional transformers, we frame the problem from a continuous perspective by predicting each dimension using a transformed mixture of Gaussians, allowing us to impose physically motivated constraints.

Finally, we show initial results on a diffusion-based 3D model for the generation of LArTPC events. We show ways of enhancing the scalability of the proposed method by utilizing hierarchical generation.

Speaker: Radi Radev (CERN) -

4:55 PM

Q/A 10m

-

5:05 PM

Machine-Learning-Based Data Reconstruction Chain for the Short Baseline Near Detector 25m

The Short-Baseline Near Detector (SBND) is a 100-ton scale Liquid Argon Time Projection Chamber (LArTPC) neutrino detector positioned in the Booster Neutrino Beam (BNB) at Fermilab, as part of the Short-Baseline Neutrino (SBN) program. Recent inroads in Computer Vision (CV) and Machine Learning (ML) have motivated a new approach to the analysis of particle imaging detector data. SBND data can therefore be reconstructed using an end-to-end, ML-based data reconstruction chain for LArTPCs. The reconstruction chain is a multi-task network cascade which combines point-level feature extraction using Sparse Convolutional Neural Networks (CNN) and particle superstructure formation using Graph Neural Networks (GNN). We demonstrate the expected reconstruction performance on SBND.

Speaker: Brinden Carlson (University of Florida) -

5:30 PM

Q/A 10m

-

5:40 PM

Public Data Challenge 10m

Large-scale public datasets have always been key to accelerating research and enabling new discoveries in the machine learning research community. We propose to build a public dataset repository for the experimental neutrino physics community, which will be a new machine learning research hub that connects researchers from multiple domains including neutrino physics, machine learning, and more. Furthermore, the public data repository will make our research more accessible and transparent. However, there are also challenges. Along with each dataset, science challenges must be described clearly, with well-defined performance metrics that drive R&D of new techniques. Moreover, those datasets must be versioned and maintained over time, which takes human effort on top of technical needs such as large-scale storage space. In this talk, we discuss these challenges and ask for community input in order to make this repository serve experimental neutrino physics as well as possible.

Speaker: Kazuhiro Terao (SLAC) -

5:50 PM

Q/A 5m

-

1:35 PM

-

-

9:00 AM

→

12:25 PM

Day 3 - Morning HCI J4

HCI J4

ETH Zurich

ETH Zürich, Hönggerberg campus, Stefano-Franscini-Platz 5, 8093 Zurich, Switzerland.

Machine learning based data reconstruction and analysis at the DUNE far detector

-

9:00 AM

A novel approach in the Pandora multi-algorithm reconstruction to tackle challenging topologies at DUNE 15m

The Deep Underground Neutrino Experiment (DUNE) will measure all long-baseline neutrino oscillation parameters, including the CP-violating phase and the neutrino mass ordering, in one single experiment. DUNE will also study astrophysical neutrinos and perform a broad range of new physics searches. This ambitious programme is enabled by the very high-resolution imaging capabilities of Liquid-Argon Time-Projection Chambers (LArTPCs). In order to fully exploit the LArTPC capabilities, sophisticated reconstruction techniques are required to tackle a wide range of challenging event topologies that have a critical impact on the flagship goals at DUNE, such as overlapping photon showers from neutral pions. This talk will describe expanding the multi-algorithm Pandora reconstruction with a novel reclustering approach that capitalises on deep learning techniques such as Graph Neural Networks to tackle these topologies and boost the pattern recognition performance.

Speaker: Maria Brigida Brunetti (University of Warwick) -

9:15 AM

Q/A 10m

-

9:25 AM

Machine Learning Approaches to Particle Identification in the DUNE Far Detector 15m

One of the primary oscillation physics goals of the Deep Underground Neutrino Experiment (DUNE) far detector (FD) is the measurement of CP violation in the neutrino sector. To achieve this, DUNE plans to employ large-scale liquid-argon time-projection chamber technology to capture neutrino interactions in unprecedented detail. Such fine-grained images demand highly sophisticated automated reconstruction software such as Pandora to unlock the potential for a highly efficient and pure selection of charged-current (CC) muon/electron neutrino interactions. This talk presents the Pandora-based CC muon/electron neutrino interaction selection and explores its particle-identification methods, which range from simple boosted decision trees to more complex deep learning approaches. This work illustrates the reconstruction-to-analysis continuum, via which specific Pandora reconstruction improvements are motivated and targeted, moving DUNE ever closer to uncovering the mysteries of neutrinos.

Speaker: Isobel Mawby (Lancaster University) -

9:40 AM

Q/A 10m

-

9:50 AM

NuGraph2: A Graph Neural Network for Neutrino Event Reconstruction 25m

Neutrino experiments are set to probe some of the most important open questions in physics, from CP violation to the nature of dark matter. The technology of choice for many of these experiments is the liquid argon time projection chamber (LArTPC). In current LArTPC experiments, reconstruction performance often represents a limiting factor for the sensitivity. New developments are therefore needed to unlock the full potential of LArTPC experiments.

NuGraph2 is a state of the art Graph Neural Network for reconstruction of data in LArTPC experiments [https://arxiv.org/abs/2403.11872]. NuGraph2 utilizes a heterogeneous graph structure, with separate subgraphs of 2D nodes (hits in each plane) connected across planes via 3D nodes (space points). The model provides a consistent description of the neutrino interaction across all planes. NuGraph2 is a multi-purpose network, with a common message-passing attention engine connected to multiple decoders with different classification or regression tasks. These include the classification of detector hits according to the particle type that produced them (semantic segmentation) and the separation of hits from the neutrino interaction from hits due to noise or cosmic-ray background. Additional decoders are being developed, performing tasks such as the regression of the neutrino interaction vertex position.

Performance results will be presented based on publicly available samples from MicroBooNE. These include both physics performance metrics, achieving 95% accuracy for semantic segmentation and 98% classification of neutrino hits, as well as computational metrics for training and for inference on CPU or GPU. The status of the NuGraph integration in the LArSoft software framework will be presented, as well as initial studies about model interpretability and injection of domain knowledge.

Speaker: Giuseppe Cerati (Fermilab) -

10:15 AM

Q/A 10m

-

10:25 AM

Coffee break 35m

-

11:00 AM

NuGraph3: Towards Full LArTPC Reconstruction using GNNs 15m

The highly detailed images produced by liquid argon time projection chamber (LArTPC) technology hold the promise of an unprecedented window into neutrino interactions; however, traditional reconstruction techniques struggle to efficiently use all available information. This is especially true for complicated interactions produced by tau neutrinos, which are typically large, consist of many tracks, and differ from other interactions largely by subtle angular differences.

NuGraph2, the Exa.TrkX Graph Neural Network (GNN) for reconstruction of LArTPC data, is a message-passing attention network over a heterogeneous graph structure, with separate subgraphs of 2D nodes (hits in each plane) connected across planes via 3D nodes (space points). The model provides a consistent description of the neutrino interaction across all planes. The GNN performs a semantic segmentation task, classifying detector hits according to the particle type that produced them, achieving ~95% accuracy when integrated over all particle classes.

Based on this success, we are building a new network, NuGraph3, which will generalize NuGraph2's structure to a hierarchical message-passing attention network. The lowest layer will consist of the same subgraphs of 2D nodes that NuGraph2 operates on. Higher layers will be dynamically generated through a learned metric space embedding. This will allow the network to build higher level representations out of low level hits. After iterative refinement, the hierarchical structure will reflect a particle tree reconstruction of each event. This hierarchical structure also provides a natural way to construct event level features for use in reconstructing quantities like vertex position and neutrino interaction classification. We will present preliminary work building particle clusters, and compare semantic segmentation results with and without hierarchical message passing.

Speaker: Adam Aurisano (University of Cincinnati) -

11:15 AM

Q/A 10m

-

11:25 AM

Vertex-finding in a DUNE far-detector using Pandora deep-learning 15m

The Deep Underground Neutrino Experiment will operate large-scale Liquid-Argon Time-Projection Chambers at the far site in South Dakota, producing high-resolution images of neutrino interactions. Extracting the maximum value from the images requires sophisticated pattern-recognition to interpret detector signals as physically meaningful objects for physics analyses. Identifying the neutrino interaction vertex is critical because subsequent algorithms depend on this to identify and separate individual primary particles. In this talk I will present a UNet within the Pandora pattern-recognition framework for identifying the interaction vertex and look at the robustness of the network to input variations motivated by alternative nuclear models.

Speaker: Andrew Chappell (University of Warwick) -

11:40 AM

Q/A 10m

-

11:50 AM

Enhancing Liquid Argon TPCs Performance in Low-Energy Physics Classification Problems with Quantum Machine Learning 25m

Deep Learning (DL) techniques for background event rejection in Liquid Argon Time Projection Chambers (LArTPCs) have been extensively studied for various physics channels [1,2], yielding promising results. However, the potential of massive LArTPCs in the low-energy regime remains to be fully exploited, particularly in the classification of few-hits events that encode information hardly accessible to conventional algorithms.

In this contribution, we highlight the performance of Deep Learning (DL)-based [3] and, especially, Quantum Machine Learning (QML)-based background mitigation strategies to enhance the sensitivity of kton-scale LArTPCs for rare event searches in the few-MeV energy range. We emphasize their potential in the search for neutrinoless double beta decay (0νββ) of the 136Xe isotope within the Deep Underground Neutrino Experiment (DUNE), focusing on the DUNE Phase II detectors (“Module of Opportunity”). These low-energy events generate very short, undersampled tracks in LArTPCs that are difficult to analyze.

We present the application of novel QML algorithms, particularly Quantum Support Vector Machines (QSVMs) [4], for addressing this challenging classification task and compare it to deterministic algorithms and Deep Learning (DL) approaches like Convolutional Neural Networks and Transformers. We further highlight the weaknesses and strengths of each method.

QSVMs exploit quantum computation to map the original features into a higher-dimensional vector space, so that the resulting hyperplane allows better separation between classes. The choice of this transformation, called the feature map, is critical and results in a positive semidefinite scalar function called the kernel. Quantum kernels can be implemented on modern noisy intermediate-scale commercial quantum processors and provide an evident advantage where the required feature transformation is intractable classically.

In our work, QSVMs demonstrate competitive performance but require careful design of their kernel function. Optimizing a Quantum Kernel for a specific classification task is an open problem in QML. We provide a strategy to address this challenge through powerful meta-heuristic genetic optimization algorithms, enabling us to discover Quantum Kernel functions tailored to both the dataset and the quantum chip in use.
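As a purely classical illustration of the kernel idea described above (not the quantum kernels or the genetic optimization used in this work), the following sketch builds a kernel from an explicit feature map and checks the positive semidefinite property on a few points. The feature map, the sample points and all function names are hypothetical choices made only for this example.

```python
import math

def feature_map(x):
    """Hypothetical classical feature map: (x1, x2) -> 5-dimensional vector."""
    x1, x2 = x
    return [x1, x2, x1 * x2, math.cos(x1), math.sin(x2)]

def kernel(x, y):
    """Kernel value = inner product of the two mapped feature vectors."""
    return sum(a * b for a, b in zip(feature_map(x), feature_map(y)))

def gram_matrix(points):
    """Matrix of kernel values between all pairs of points."""
    return [[kernel(p, q) for q in points] for p in points]

def quadratic_form(K, c):
    """c^T K c, which is non-negative for every c when K is positive semidefinite."""
    n = len(c)
    return sum(c[i] * K[i][j] * c[j] for i in range(n) for j in range(n))

# Made-up 2D feature vectors standing in for event-level features.
points = [(0.1, 0.2), (1.0, -0.5), (-0.7, 0.3), (0.4, 0.9)]
K = gram_matrix(points)
```

A support vector machine only needs the matrix `K`, so any other valid kernel, classical or quantum, could be substituted for `kernel` without changing the rest of the classifier.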

References

[1] DUNE Collaboration, Phys. Rev. D. 102, 092003 (2020).

[2] MicroBooNE Collaboration, Phys. Rev. D. 103, 092003 (2021).

[3] R. Moretti et al., e-print arXiv:2305.09744 (2023).

[4] Havlíček, V et al., Nature. 567, 209–212 (2019).

Speaker: Roberto Moretti (INFN - Sezione di Milano Bicocca) -

12:15 PM

Q/A 10m

-

9:00 AM

-

12:25 PM

→

1:25 PM

Lunch break 1h

-

1:25 PM

→

5:55 PM

Day 3 - Afternoon HCI J4

HCI J4

ETH Zurich

ETH Zürich, Hönggerberg campus, Stefano-Franscini-Platz 5, 8093 Zurich, Switzerland.

Machine learning based data reconstruction and analysis in ring imaging Cherenkov and highly-segmented optical detectors

-

1:25 PM

Contrastive Learning for Robust Representations of Neutrino Data 25m

A key challenge in the application of deep learning to neutrino experiments is overcoming the discrepancy between data and Monte Carlo simulation used in training. In order to mitigate bias when deep learning models are used as part of an analysis they must be made robust to mismodelling of the detector simulation. We demonstrate that contrastive learning can be applied as a pre-training step to minimise the dependence of downstream tasks on the detector simulation. This is achieved by the self-supervised algorithm of contrasting different simulated views and augmentations of the same event during the training. The contrastive model can then be frozen and the representation it generates used as training data for classification and regression tasks. We use sparse tensor networks to apply this method to 3D LArTPC data. We show that the contrastive pre-training produces representations that are robust to shifts in the detector simulation both in and out of sample.
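The contrastive objective described in this abstract can be illustrated with a small InfoNCE-style loss. This is a minimal sketch under stated assumptions: the embeddings are made-up 2D vectors rather than outputs of a sparse tensor network, the loss is computed in one direction only, and the names `info_nce` and `temperature` are illustrative, not taken from the speaker's code.

```python
import math

def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def info_nce(views_a, views_b, temperature=0.1):
    """Mean InfoNCE loss over a batch.

    views_a[i] and views_b[i] are embeddings of two views of event i;
    the other entries of views_b act as negatives for views_a[i].
    """
    total = 0.0
    for i, anchor in enumerate(views_a):
        logits = [cosine(anchor, v) / temperature for v in views_b]
        top = max(logits)  # subtract the maximum for numerical stability
        log_norm = top + math.log(sum(math.exp(l - top) for l in logits))
        total += log_norm - logits[i]
    return total / len(views_a)

# Hypothetical embeddings: view B is a slightly perturbed copy of view A.
view_a = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
view_b = [[0.9, 0.1], [0.1, 0.9], [-0.9, -0.1]]
loss_matched = info_nce(view_a, view_b)
# Pairing each event with a view of a different event should be penalized.
loss_mismatched = info_nce(view_a, view_b[1:] + view_b[:1])
```

Minimizing this loss pulls embeddings of different views of the same event together and pushes other events apart, which is what makes the learned representation insensitive to the variations used to generate the views.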

Speaker: Alex Wilkinson (University College London) -

1:50 PM

Q/A 10m

-

2:00 PM

Enhanced Event Reconstruction at Hyper-Kamiokande using Graph Neural Networks and Prospect of the Impact to CP Violation Search 25m

Hyper-Kamiokande (HK) is the next-generation neutrino observatory in Japan and the successor of the Super-Kamiokande (SK) detector. It has been designed to extend the legacy of its predecessor into new realms of neutrino physics ranging from MeV (solar or supernova neutrinos) to several GeV energy scales, and in particular, to discover CP violation for the very first time in the lepton sector. To reach these ambitious goals, HK will rely on an eight times larger target volume, enhanced photodetector capacity compared to SK, as well as precise calibration devices, setting the stage for breakthroughs in precision and computational performance.

In this context, the adoption of machine learning techniques for event reconstruction is tailored to exploit the full potential of HK. Specifically, graph neural networks (GNNs) allow for a more precise and faster event reconstruction with non-Euclidean geometry, ranging from low to high-energy events. In this talk, we will first present an overview of the different reconstruction algorithms used to reconstruct high-energy (GeV) events in HK. We will then show the very first and promising results of our GNN-based algorithm, and demonstrate that it already boosts the HK physics potential in the CP violation discovery range (GeV scale). We will conclude by presenting the prospects for the future HK reconstruction beyond the GeV scale.

Speaker: Christine Quach (LLR - CNRS) -

2:25 PM

Q/A 10m

-

2:35 PM

A Comprehensive Insight into Machine Learning Techniques in KM3NeT 15m

KM3NeT/ARCA and KM3NeT/ORCA are the new generation of neutrino telescopes located in the depths of the Mediterranean Sea. Each comprises a grid of optical sensors that capture the Cherenkov light emitted by charged particles produced in neutrino interactions. KM3NeT/ARCA, sensitive to interactions with energies ranging from TeV to PeV, focuses on cosmic neutrinos, while KM3NeT/ORCA investigates atmospheric neutrino oscillations in the GeV energy range.

These detectors analyse light patterns to infer the direction, energy, and position of neutrino interactions. While likelihood-based methods have traditionally been used for reconstruction, recent advancements in machine learning offer compelling alternatives. Furthermore, detecting neutrinos becomes particularly challenging when dealing with high background rates in the detector. Machine learning techniques have been developed not only to categorise events based on the nature of the interaction, but also to classify events as background or signal.

These machine learning techniques encompass a variety of approaches, ranging from Boosted Decision Trees to deep learning techniques. This contribution aims to provide an overview of the primary machine learning algorithms currently employed in KM3NeT. In addition, it will explore future developments, such as the introduction of transformers for event reconstruction and the implementation of GraphNeT for event selection and reconstruction.

Speaker: Jorge Prado González (KM3NeT) -

2:50 PM

Q/A 10m

-

3:00 PM

Convolutional Neural Network for track reconstruction and PID in the HA-TPC of ND280 upgrade 15m

The T2K near detector ND280 is currently being upgraded to prepare for the second phase of data taking, T2K-II, with the purpose of confirming at the 3σ level whether CP symmetry is conserved or violated in neutrino oscillations. For this upgrade, new detectors are currently being installed, including so-called High-Angle Time Projection Chambers. They are instrumented with Encapsulated Resistive MicroMegas detectors as the readout plane, which spread the charge over several pads and thus allow for a better reconstruction of the track position.

To take advantage of this feature, a new reconstruction algorithm was developed using the ResNet50 Convolutional Neural Network to extract the track parameters and perform particle identification. This technique was trained and tested on simulations, proving the capability of the CNN to reconstruct the particle momentum and perform particle identification.

Speaker: Anaelle Chalumeau (LPNHE) -

3:15 PM

Q/A 10m

-

3:25 PM

Advancing neutrino interaction reconstruction: a deep learning strategy in highly-segmented dense detectors 25m

Deep learning methods are becoming indispensable in the data analysis of particle physics experiments, with current neutrino studies demonstrating their superiority over traditional tools in various domains, particularly in identifying particles produced by neutrino interactions and fitting their trajectories. This talk will showcase a comprehensive reconstruction strategy of the neutrino interaction final state employing advanced deep learning within highly-segmented dense detectors. The challenges addressed range from mitigating noise from geometrical detector ambiguities to accurately decomposing images of overlapping particle signatures in the proximity of the neutrino interaction vertex and inferring their kinematic parameters. The presented strategy leverages state-of-the-art algorithms, including transformers and generative models, with the potential to significantly enhance the sensitivity of future physics measurements.

Speaker: Mayeul Aubin (ETH Zurich) -

3:50 PM

Q/A 10m

-

4:00 PM

Coffee break 30m

-

4:30 PM

Machine Learning-Assisted Unfolding for Neutrino Cross Section Measurements 15m

The choice of unfolding method for a cross-section measurement is tightly coupled to the model dependence of the efficiency correction and the overall impact of cross-section modeling uncertainties in the analysis. A key issue is the dimensionality used, as the kinematics of all outgoing particles in an event typically affect the reconstruction performance in a neutrino detector. OmniFold is an unfolding method, previously applied to collider and cosmology datasets, that iteratively reweights a simulated dataset using machine learning to utilize arbitrarily high-dimensional information. Here, we demonstrate its use for neutrino physics using a public T2K near detector simulated dataset along with a series of mock data sets, and show its performance is comparable to or better than traditional approaches while maintaining greater flexibility.
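The core ingredient of classifier-based reweighting can be sketched in a few lines. This is not the full iterative OmniFold procedure and does not use the T2K dataset: it is a single reweighting step on made-up one-dimensional Gaussian samples, with a hand-written logistic regression standing in for the neural network classifier. All names and numbers are assumptions for illustration.

```python
import math
import random

random.seed(0)

# Toy samples of equal size: "simulation" and "data" differ by a shift in the mean.
sim = [random.gauss(0.0, 1.0) for _ in range(2000)]
data = [random.gauss(0.5, 1.0) for _ in range(2000)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_classifier(sim, data, lr=0.5, epochs=300):
    """Fit p(data | x) = sigmoid(w * x + b) by full-batch gradient descent."""
    labelled = [(x, 0.0) for x in sim] + [(x, 1.0) for x in data]
    w = b = 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, label in labelled:
            err = sigmoid(w * x + b) - label
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / len(labelled)
        b -= lr * grad_b / len(labelled)
    return w, b

def mean(values, weights=None):
    """Weighted mean; unweighted when no weights are given."""
    weights = weights or [1.0] * len(values)
    return sum(v * wt for v, wt in zip(values, weights)) / sum(weights)

w, b = train_classifier(sim, data)
# For equal sample sizes, p / (1 - p) estimates the data-to-simulation density
# ratio; for a logistic model this ratio is exp(w * x + b).
weights = [math.exp(w * x + b) for x in sim]
```

Comparing `mean(sim, weights)` with `mean(data)` shows the reweighted simulation moving towards the data. The same construction extends to many input variables, which is what allows the method to use high-dimensional information.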

Speaker: Roger Huang (Lawrence Berkeley National Laboratory) -

4:45 PM

Q/A 10m

-

4:55 PM

Application of Machine Learning techniques to improve event reconstruction in Super-Kamiokande 15m

In this preliminary study, we explore the application of Machine Learning algorithms for reconstruction in Super-Kamiokande, using simulated event samples. The aim is to develop a tool to be employed in proton decay analysis along with the official reconstruction software (fiTQun). The final goal will be to improve Cherenkov ring detection and reconstruction in multi-ring events.

Speaker: Nicola Fulvio Calabria (Dipartimento Interateneo di Fisica "M. Merlin", Politecnico di Bari) -

5:10 PM

Q/A 10m

-

5:20 PM

Deep Learning Reconstruction for the CLOUD Experiment 25m

Building upon the LiquidO detection paradigm, the CLOUD detector represents a significant evolution in neutrino detection, offering rich capabilities in capturing both spatial and temporal information of low-energy particle interactions. With a 5–10-ton opaque scintillator inner detector volume, CLOUD is the byproduct of the EIC/UKRI funded AntiMatter-OTech project, whose main objective is to make a high-statistics, above-ground measurement of antineutrinos at the Chooz reactor ultra near detector site. Possible physics measurements of CLOUD include the weak mixing angle, solar neutrinos using Indium loading, and geoneutrinos.

This talk focuses on exploiting CLOUD data through development of event reconstruction techniques required for precise measurement and classification of MeV-scale neutrino interactions. Leveraging the intrinsically segmented design of the CLOUD detector, we aim to capitalize on both timing and spatial signals to reconstruct neutrino interaction kinematics quickly and accurately. We outline innovative approaches to event reconstruction, emphasizing Likelihood-free inference density estimation techniques for reconstructing neutrino interaction kinematics. Furthermore, we discuss current work implementing event classification techniques incorporating symmetry-exploiting neural networks and the pivotal role they will play in background rejection, enhancing the precision of these measurements.

Speaker: Garrett Wendel (Penn State University) -

5:45 PM

Q/A 10m

-

1:25 PM

-

6:30 PM

→

9:30 PM

Conference dinner 3h Die Waid

Die Waid

Waidbadstrasse 45, 8037 Zürich, Switzerland (https://maps.app.goo.gl/XJv6DJzRxaiMj28s5)

-

9:00 AM

→

12:25 PM

-

-

9:00 AM

→

1:30 PM

Day 4 - Morning HCI J4

HCI J4

ETH Zurich

ETH Zürich, Hönggerberg campus, Stefano-Franscini-Platz 5, 8093 Zurich, Switzerland.

New tools enabled by machine learning techniques

-

9:00 AM

Implicit Neural Representation for Modeling the Photon Transportation in a LArTPC 25m

Modeling the light propagation in LArTPC with sinusoidal representation networks (SIREN) is scalable and capable of being calibrated using data.

In this talk, I will demonstrate a few applications of the SIREN in position reconstruction and charge-light signal correlation.

Speaker: Patrick Tsang (SLAC) -

9:25 AM

Q/A 10m

-

9:35 AM

A differentiable simulator for LArTPCs: from proof-of-concept to real applications 25m

Liquid argon time projection chambers (LArTPCs) are highly attractive for particle detection because of their tracking resolution and calorimetric reconstruction capabilities. Developing high-quality simulators for such detectors is very challenging because conventional approaches to describe different detector parameters or processes ignore their entanglement (i.e., calibrations are done one at a time), which translates into a poor description of the underlying physics by the simulator. To address this, we created a differentiable simulator that enables gradient-based optimization, allowing an in-situ simultaneous calibration of all detector parameters for the first time. The simulator has been demonstrated to robustly fit targets across a wide range of parameter space using multiple physics samples, and therefore provides a strong proof-of-concept demonstration of the utility of differentiable detector simulation for the calibration task. In this talk, I will focus on the next steps needed in order to bring this idea from proof of concept to an integral part of the operation of experiments using LArTPCs. I will discuss different physics aspects that need to be considered when analyzing real data, like the presence of electronics noise, estimation of particle segments event-by-event, and reconstruction of a detector-parameter-dependent dE/dx. Furthermore, I will present different avenues for software optimization and ways to improve the overall computational performance.
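The idea of calibrating several detector parameters simultaneously by gradient descent can be illustrated with a toy model. The sketch below is not the simulator discussed in this talk: it assumes a hypothetical two-parameter response (a gain and an electron lifetime attenuating charge over drift time), uses made-up parameter values and noise-free pseudo-data, and writes the gradients by hand where a real differentiable simulator would obtain them through automatic differentiation.

```python
import math

# Hypothetical toy detector model: observed charge = gain * q0 * exp(-t / lifetime).
TRUE_GAIN, TRUE_LIFETIME = 1.2, 2.0          # made-up values (arbitrary units, ms)
times = [2.0 * i / 49 for i in range(50)]    # drift times in ms
q0 = 1.0                                     # deposited charge, arbitrary units
observed = [TRUE_GAIN * q0 * math.exp(-t / TRUE_LIFETIME) for t in times]

def loss_and_grads(gain, rate):
    """Mean squared error and its gradients w.r.t. gain and rate = 1 / lifetime."""
    loss = d_gain = d_rate = 0.0
    for t, obs in zip(times, observed):
        decay = math.exp(-rate * t)
        resid = gain * q0 * decay - obs
        loss += resid ** 2
        d_gain += 2.0 * resid * q0 * decay
        d_rate += 2.0 * resid * (-t * gain * q0 * decay)
    n = len(times)
    return loss / n, d_gain / n, d_rate / n

def calibrate(gain=1.0, rate=0.2, lr=0.3, steps=3000):
    """Update both parameters together with plain gradient descent."""
    for _ in range(steps):
        _, d_gain, d_rate = loss_and_grads(gain, rate)
        gain -= lr * d_gain
        rate -= lr * d_rate
    return gain, 1.0 / rate

fit_gain, fit_lifetime = calibrate()
```

Because both parameters are updated from the same loss, their correlation is handled within the fit instead of being neglected by calibrating one parameter at a time.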

Speaker: Pierre Granger (APC/CNRS) -

10:00 AM

Q/A 10m

-

10:10 AM

Simultaneous high-dimensional calibration with differentiable simulation towards data application 25m

The fidelity of detector simulation is crucial for precision experiments, such as DUNE which uses liquid argon time projection chambers (LArTPCs). Conventional calibration approaches usually tackle individual detector processes and require careful tuning of the calibration procedure to mitigate the impact from elsewhere. We have previously shown a successful demonstration of differentiable simulation using larnd-sim for simultaneous high-dimensional calibration. In the early iteration, we have simplified the treatment for the input data and the stochastic electronic noise. This presentation will show our proposed solution to deal with the complex data, and benchmark the performance with a more realistic differentiable simulator. This will mark a step towards applying this technique on real data from LArTPCs.

Speaker: Yifan Chen (SLAC) -

10:35 AM

Q/A 10m

-

10:45 AM

Coffee break 30m

-

11:15 AM

Differentiable Physics Emulator for Water Cherenkov Detectors 15m

The water Cherenkov detector stands as a cornerstone in numerous physics programs such as nucleon decay search and precise neutrino measurements. Over recent decades, many such detectors have achieved groundbreaking discoveries, with preparations underway for the next generation of advancements. However, like in all other experiments, accurately quantifying detector systematic uncertainties poses a significant challenge, given their intricate impacts on the observed physics. The challenge is further accentuated in upcoming experiments, where the sheer volume of data amplifies the significance of systematic uncertainties.

In a conventional physics analysis pipeline, the understanding of detector responses often relies on empirically derived assumptions, leading to separate calibrations targeting various potential effects. While this approach has yielded insights, its time-consuming nature can limit the timely implementation of analysis upgrades. Moreover, it lacks the necessary adaptability to accommodate discrepancies arising from asymptotic inputs and factorized physics processes.

Our work on the differentiable physics emulator enhances the estimation of detector systematic uncertainties and advances physics inference across all the aforementioned aspects. By developing a novel analysis pipeline based on AI/ML techniques, we construct a physics-based detector model that is optimizable with calibration data. Thanks to the power of AI/ML, we can infer convoluted detector effects using a single differentiable model, informed by basic yet robust physics knowledge inputs. Furthermore, the scalability of an AI/ML model surpasses that of conventional methods, positioning it as a robust solution for experiments employing similar detection principles.

Speaker: Junjie Xia (IPMU) -

11:30 AM

Q/A 10m

-

11:40 AM

Advancing Detector Calibration and Event Reconstruction in Water Cherenkov Neutrino Detectors with Analytical Differentiable Simulations 15m

Drawing statistical conclusions from experimental data entails comparing it to the physics model predictions of interest. In modern particle physics experiments, producing predictions often requires three subtasks: 1) simulating the particle interactions and propagation within the detector, 2) describing the detector response and tuning its description to calibration data, and 3) developing and testing reconstruction algorithms that extract the summary metrics to be compared.

Consequently, the routine of simulating data is oftentimes distributed into several specialized frameworks, each involving a number of sequential tasks. This situation presents two major caveats. On the one hand, significant efforts are dedicated in large experimental collaborations to coordinating the above steps and maintaining each of the individual frameworks needed. On the other hand, sequentially accounting for each process ignores their correlations, a simplification too coarse for the experimental necessities of most next-generation neutrino experiments.

In this talk, we present a toy model of an integrated simulation using automatic differentiation that showcases a new direction to overcome the above issues. In particular, we focus on the case of a toy water Cherenkov detector to illustrate how a differentiable analytic forward model can be used to perform integrated calibration and reconstruction parameter optimization using gradient descent. This novel approach showcases the potential of machine learning to transform long-standing analysis methods in particle physics, and is aimed at advancing the existing detector calibration and reconstruction alternatives for water Cherenkov neutrino detectors in the future.

Speaker: César Jesús-Valls (Kavli IPMU, University of Tokyo) -

11:55 AM

Q/A 10m

-

12:05 PM

Application of Conformal Inference in High Energy Physics 15m

In high energy physics, the detection of rare events and the computation of their properties require precise and reliable statistical methods, with uncertainty quantification playing a crucial role. Nowadays, most research relies on machine learning methods, where the calibration of output probabilities is not always straightforward. How can we then draw conclusions with the required five sigma statistical significance, which serves as the essential threshold for validating new findings?

Conformal prediction methods are becoming one of the main approaches in both academia and industry to quantify uncertainty, calculate confidence intervals in regression tasks, and calibrate probabilities in classification tasks. This presentation will introduce the basic principles underlying conformal prediction and discuss data exchangeability and conformity scores. We will demonstrate the entire approach by applying conformal prediction to a classification task using a Monte Carlo sample from the DUNE experiment.

Speaker: Jiri Franc (Czech Technical University in Prague) -
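For readers unfamiliar with the technique named in the abstract above, here is a minimal sketch of split conformal prediction for classification. It is not the speaker's analysis: the score (one minus the predicted probability of the true class), the function names, and the synthetic `toy_sample` generator standing in for classifier outputs on labelled Monte Carlo are all assumptions made for illustration.

```python
import math
import random


def conformal_quantile(scores, alpha):
    """Finite-sample-corrected (1 - alpha) quantile of calibration scores."""
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))  # rank among the sorted scores
    if k > n:
        return float("inf")  # too few calibration points: keep every class
    return sorted(scores)[k - 1]


def calibrate(cal_probs, cal_labels, alpha):
    """Score each calibration event by 1 - p(true class), then threshold."""
    scores = [1.0 - p[y] for p, y in zip(cal_probs, cal_labels)]
    return conformal_quantile(scores, alpha)


def prediction_set(probs, qhat):
    """All classes whose score does not exceed the calibrated threshold."""
    return [k for k, p in enumerate(probs) if 1.0 - p <= qhat]


def coverage(probs, labels, qhat):
    """Fraction of events whose true class lies in the prediction set."""
    hits = sum(y in prediction_set(p, qhat) for p, y in zip(probs, labels))
    return hits / len(labels)


def toy_sample(n, rng, n_classes=3):
    """Hypothetical stand-in for classifier probabilities on labelled MC."""
    probs, labels = [], []
    for _ in range(n):
        y = rng.randrange(n_classes)
        logits = [rng.gauss(0.0, 1.0) for _ in range(n_classes)]
        logits[y] += 1.5  # the true class tends to score higher
        z = [math.exp(v) for v in logits]
        total = sum(z)
        probs.append([v / total for v in z])
        labels.append(y)
    return probs, labels


if __name__ == "__main__":
    rng = random.Random(0)
    cal_p, cal_y = toy_sample(1000, rng)
    test_p, test_y = toy_sample(2000, rng)
    qhat = calibrate(cal_p, cal_y, alpha=0.1)
    print("threshold:", qhat)
    print("empirical coverage:", coverage(test_p, test_y, qhat))
```

The coverage guarantee of this construction is marginal and relies on the calibration and test events being exchangeable, so the empirical coverage on a finite test sample fluctuates around the target level.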

12:20 PM

Q/A 10m

-

12:30 PM

Empirical fits to inclusive electron-carbon scattering data obtained by deep-learning methods 15m

We shall review the results of our recent work on the development of the NuWro Monte Carlo event generator. We are applying deep learning techniques to optimize the NuWro generator. As a first step, we work on a neural network model that generates lepton-nucleus cross-sections. We obtained a deep neural network model that predicts the electron-carbon cross-section over a broad kinematic region, extending from the quasielastic peak through resonance excitation to the onset of deep-inelastic scattering. We considered two ensemble-based methods of obtaining the model-independent parametrizations and the corresponding uncertainties: bootstrap aggregation and Monte Carlo dropout. The loss is defined by a $\chi^2$ that includes point-to-point uncertainties. Additionally, we include the systematic normalization uncertainties for each independent set of measurements. We compared the predictions with a test data set excluded from the training process, with theoretical predictions obtained within the spectral function approach, and with additional measurements not included in the analysis.
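The abstract above does not spell out its loss, so the following is only a sketch of one common way to write a $\chi^2$ with point-to-point uncertainties plus a normalization penalty per data set. The convention that a normalization factor multiplies the model, the dictionary keys, and the names `chi2` and `best_norm` are assumptions for illustration, not the authors' definition.

```python
def chi2(datasets, model, norms):
    """Chi-square over several data sets, one normalization factor each.

    Each data set is a dict with keys "x", "y", "sigma" (point-to-point
    uncertainties) and "norm_sigma" (normalization uncertainty).
    """
    total = 0.0
    for ds, n in zip(datasets, norms):
        for x, y, sigma in zip(ds["x"], ds["y"], ds["sigma"]):
            total += ((y - n * model(x)) / sigma) ** 2
        # Penalty pulling the normalization towards 1 within its uncertainty.
        total += ((n - 1.0) / ds["norm_sigma"]) ** 2
    return total


def best_norm(ds, model):
    """Normalization minimizing chi2 for one data set at fixed model.

    Setting d(chi2)/dn = 0 gives a closed form because chi2 is quadratic in n.
    """
    w = 1.0 / ds["norm_sigma"] ** 2
    num = sum(model(x) * y / s ** 2
              for x, y, s in zip(ds["x"], ds["y"], ds["sigma"])) + w
    den = sum(model(x) ** 2 / s ** 2
              for x, s in zip(ds["x"], ds["sigma"])) + w
    return num / den


if __name__ == "__main__":
    toy = {"x": [1.0, 2.0], "y": [2.0, 4.0],
           "sigma": [1.0, 1.0], "norm_sigma": 1.0}
    line = lambda x: x  # stand-in for a trained network
    n_hat = best_norm(toy, line)
    print(n_hat, chi2([toy], line, [n_hat]))
```

In a network fit the normalizations can either be profiled in closed form like this at each step or treated as extra trainable parameters; which option the authors chose is not stated in the abstract.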

Speaker: Beata Kowal (University of Wroclaw) -

12:45 PM

Q/A 10m

-

12:55 PM

Uncertainty Propagation in Neutrino Reconstruction Models 15m

With the rising importance of large sequential models in neutrino imaging and reconstruction tasks, a robust estimation of uncertainty at each stage of reconstruction is essential. These chained models produce valuable physical representations of the evolution of a neutrino interaction, which are of interest to many disparate fields of study. This talk will discuss methods available for estimating aleatoric and epistemic uncertainties, both via propagation of input uncertainties and ab initio, by learning from input distributions.
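To make "propagation of input uncertainties" through a chained model concrete, here is a minimal Monte Carlo sketch. It is not taken from the talk: the function name `propagate`, the Gaussian input uncertainty, and the two toy linear stages are assumptions for illustration, and the sketch covers only input-uncertainty propagation, not the epistemic part discussed in the abstract above.

```python
import random
import statistics


def propagate(stages, mean, sigma, n_samples=20000, seed=0):
    """Sample the input, push the samples through each stage in turn, and
    report (mean, standard deviation) of the values after every stage."""
    rng = random.Random(seed)
    values = [rng.gauss(mean, sigma) for _ in range(n_samples)]
    summary = []
    for stage in stages:
        values = [stage(v) for v in values]
        summary.append((statistics.mean(values), statistics.stdev(values)))
    return summary


if __name__ == "__main__":
    # Two hypothetical reconstruction stages; real stages would be models.
    chain = [lambda x: 3.0 * x + 1.0, lambda y: 2.0 * y]
    for i, (m, s) in enumerate(propagate(chain, mean=1.0, sigma=0.1), 1):
        print(f"stage {i}: mean={m:.3f} std={s:.3f}")
```

Sampling keeps the correlations between stages automatically, since each sample travels through the whole chain, at the cost of evaluating every stage once per sample.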

Speaker: Daniel Douglas (SLAC National Accelerator Laboratory) -

1:10 PM

Q/A 10m

- 1:20 PM

-

9:00 AM

-

9:00 AM

→

1:30 PM