Speaker

Description

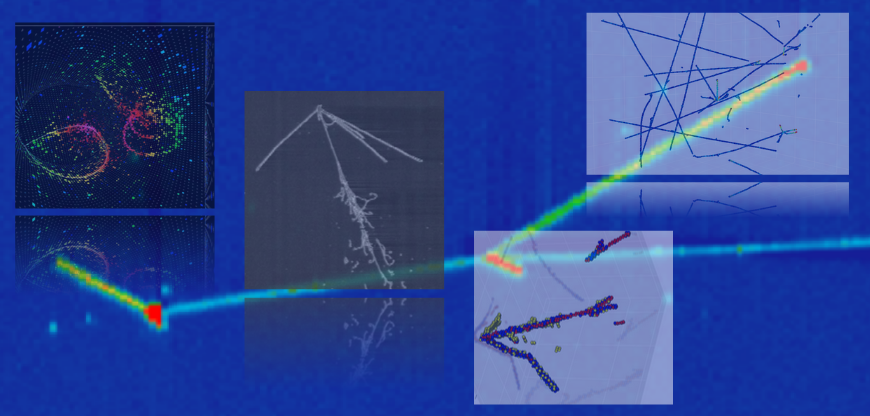

A key challenge in applying deep learning to neutrino experiments is overcoming the discrepancy between data and the Monte Carlo simulation used for training. To mitigate bias when deep learning models are used as part of an analysis, the models must be made robust to mismodelling of the detector simulation. We demonstrate that contrastive learning can be applied as a pre-training step to minimise the dependence of downstream tasks on the detector simulation. This self-supervised approach contrasts different simulated views and augmentations of the same event during training. The contrastive model can then be frozen, and the representations it produces used as training data for classification and regression tasks. We apply this method to 3D LArTPC data using sparse tensor networks. We show that contrastive pre-training produces representations that are robust to shifts in the detector simulation, both in and out of sample.
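The abstract does not name the contrastive objective. The sketch below is a hedged illustration that assumes a SimCLR-style NT-Xent loss, with dense NumPy vectors standing in for the outputs of the sparse-tensor encoder. The function name `nt_xent_loss`, the temperature of 0.1 and the toy "simulation variants" are all hypothetical. The two views of each event form a positive pair and every other event in the batch acts as a negative, so minimising the loss pulls the views of one event together regardless of which simulation variant produced them.

```python
import numpy as np


def nt_xent_loss(z_a: np.ndarray, z_b: np.ndarray, temperature: float = 0.1) -> float:
    """Hypothetical SimCLR-style NT-Xent loss for a batch of paired embeddings.

    z_a[i] and z_b[i] are embeddings of two views of the same event i
    (e.g. two detector-simulation variants or two augmentations).
    All other embeddings in the batch are treated as negatives.
    """
    assert z_a.shape == z_b.shape
    n = z_a.shape[0]
    # L2-normalise so that dot products are cosine similarities.
    z = np.concatenate([z_a, z_b], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    sim = z @ z.T / temperature  # shape (2n, 2n)
    # Exclude each embedding's similarity with itself from the softmax.
    np.fill_diagonal(sim, -np.inf)
    # The positive partner of row i is row i + n, and vice versa.
    pos_idx = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    # Numerically stable log-softmax evaluated at the positive partner.
    row_max = sim.max(axis=1, keepdims=True)
    log_denom = row_max[:, 0] + np.log(np.exp(sim - row_max).sum(axis=1))
    log_prob_pos = sim[np.arange(2 * n), pos_idx] - log_denom
    return float(-log_prob_pos.mean())


# Toy usage: two hypothetical simulation variants of the same 8 events.
rng = np.random.default_rng(0)
events = rng.normal(size=(8, 16))
view_a = events + 0.01 * rng.normal(size=events.shape)
view_b = events + 0.01 * rng.normal(size=events.shape)

aligned = nt_xent_loss(view_a, view_b)
# Rolling the batch pairs every event with the wrong partner.
mismatched = nt_xent_loss(view_a, np.roll(view_b, 1, axis=0))
print(aligned < mismatched)
```

In a full pipeline the encoder producing these embeddings would be trained to minimise such a loss, then frozen, and its outputs passed to separate classification or regression heads.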

| Type of contribution | Talk (30 minutes) |
|---|---|